CNC vision research log

Published: 2019 Sept 14

Last updated: 2019 Oct 30

I’m playing around with computer vision in the context of CNC machining. Partly to improve my machining workflow and material efficiency, but mostly to goof with linear algebra and computer vision.

Scope + goals:

- Display CNC machine bed camera feed live within CAD/CAM software (Autodesk Inventor)

- CAD software as “UI” — no separate window or controls

- Be faster, easier, and more reliable than manual measurement (i.e., clamp stock anywhere, do all positioning in silico)

Note: This page isn’t a formal writeup — it’s a research log that includes lots of technical incantations, hacks, and dead-ends.

TODO

- Visual structure from motion http://ccwu.me/vsfm/

2019 Oct 30

Last month I realized that 2D was insufficient and I needed full 3D reconstruction. I’m aware of two possible broad directions:

- reconstruction from depth map captured by depth camera (possibly doable real time?)

- photogrammetry, 3D reconstruction from series of regular 2D camera images (definitely not realtime, but possibly more accurate?)

I first decided to explore the former. A newsletter reader pointed me to the 2011 Kinect Fusion research work. Googling “Kinect Fusion RealSense” led me to several implementations and related work:

RecFusion: I tried this commercial library first because it had prebuilt binaries and looked promising. However, on my Windows 10 VM it failed to open with an OpenGL error.

RealSense OpenCV Kinfu: Looks promising, but maybe hard to compile (Kinect Fusion is a patented algorithm, so OpenCV must be recompiled with special flags). This 2016 Kinfu + RealSense demo makes it look accurate enough for my needs. The video’s author, replying to a comment, says: “If you want an algorithm that works out of the box with RealSense, I recommend you look into more recent open-source solutions like RTABMAP, InfiniTAM, ElasticFusion, or others.” See also the 2019 Kinfu with AzureKinect DK demo.

ElasticFusion: I can’t use because it requires CUDA (not supported on my Mac Mini).

InfiniTAM: 2 years since last commit, but looks fast and promising; no obvious docs, so not clear how easy it’d be to get running.

RTABMAP: Looks very well documented, fresh commits. Most examples seem large scale (mapping buildings); not sure if that says anything about accuracy for my small use case.

BundleFusion: 2 years since last commit, only committers are paper authors, requires CUDA. Demo video.

FastFusion from 2014 uses depth maps with known camera pose information to do real-time CPU-only reconstruction. A YouTube comment (always reliable) remarks “still state of the art in 2019…nothing else can do this on CPU”.

Open3D: Docs reference research papers from 2015 and 2017, so maybe it works better than the 2011 Kinect Fusion work? More importantly, it’s well-documented, has fresh commits, and has tons of GitHub issues with responses.

Open3D

I started here because it was well documented and had out of the box RealSense camera support. Following the example in the docs I tried to scan a 3D scene consisting of a tape measure, metal doweling jig, and wooden rod:

I captured 1237 frames from waving my RealSense SR300 camera around and the steps below took about 12 minutes on my i7 Mac Mini (no CUDA GPU).

pip3 install joblib pyrealsense2

# record frames; had to edit source to request lower resolution 640x480 depth stream

cd V:\open3D\examples\Python\ReconstructionSystem\Sensors

python .\realsense_recorder.py --record_imgs

cd ..

python run_system.py config/realsense.json --make

python run_system.py config/realsense.json --register

python run_system.py config/realsense.json --refine

python run_system.py config/realsense.json --integrate

I used MeshLab to open up the resulting 187 MB model, which unfortunately turned out terribly:

Digging in more:

In this GitHub issue, a RealSense project member mentions that both Kinfu and Open3D reconstructions are quite sensitive to rotations.

An Open3D author also mentions that RealSense cameras are noisy and suggests the StructureSensor ($400 plus an iPad) or AzureKinect ($400). This YouTube video comparison between the AzureKinect and RealSense D435 pointclouds definitely makes the former look better. (Aside: it’s also rad to just see someone walking around a pointcloud in VR.)

Some interesting Open3D GitHub issues:

- Aligning pointcloud with 3D CAD model

- Using extrinsic camera pose. I.e., maybe reconstruction would be better if I put fiducial markers in the scene?

- Even the sample dataset reconstruction fails sometimes; perhaps due to multithreading or the use of RANSAC (which involves random sampling)?
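A toy illustration of why RANSAC can give different answers on different runs. This is a 2D line fit, not Open3D's registration code; the data, tolerance, and function name are invented for the example. With a fixed seed the result is reproducible, and with few iterations and no seed it can vary from run to run.

```python
import random


def ransac_line(points, iterations=200, tolerance=0.1, seed=None):
    """Fit y = slope * x + intercept by RANSAC; returns (slope, intercept, inlier_count)."""
    rng = random.Random(seed)
    best = (0.0, 0.0, 0)
    for _ in range(iterations):
        # Randomly pick the minimal sample: two points define a line.
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        if x1 == x2:
            continue  # vertical line, cannot be written as y = slope * x + intercept
        slope = (y2 - y1) / (x2 - x1)
        intercept = y1 - slope * x1
        # Score the candidate by how many points lie close to it.
        inliers = sum(1 for x, y in points
                      if abs(y - (slope * x + intercept)) <= tolerance)
        if inliers > best[2]:
            best = (slope, intercept, inliers)
    return best


# 20 points on y = 2x + 1 plus 5 gross outliers (all values invented).
points = [(x, 2 * x + 1) for x in range(20)]
points += [(3, 40), (7, -12), (11, 60), (15, 2), (18, -30)]

print(ransac_line(points, seed=1))        # fixed seed: same answer every run
print(ransac_line(points, iterations=3))  # unseeded, few iterations: may vary
```

If the flaky reconstruction really does come from random sampling, pinning the random seed (where the library allows it) would be one way to check.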

2019 Sept 29

Video timestamps:

- 3:00 manual alignment using web browser

- 5:10 image composite editor from Microsoft

- 6:15 is perspective distortion unavoidable?

- 9:45 UDP streaming in Rust

- 11:50 RealSense camera API

- 15:00 ShopBot CNC controlled from Rust

- 18:20 Rust in Python

My main focus over the past week has been to take precisely located images of the CNC machine bed and combine them to form a high-resolution composite image. Work included:

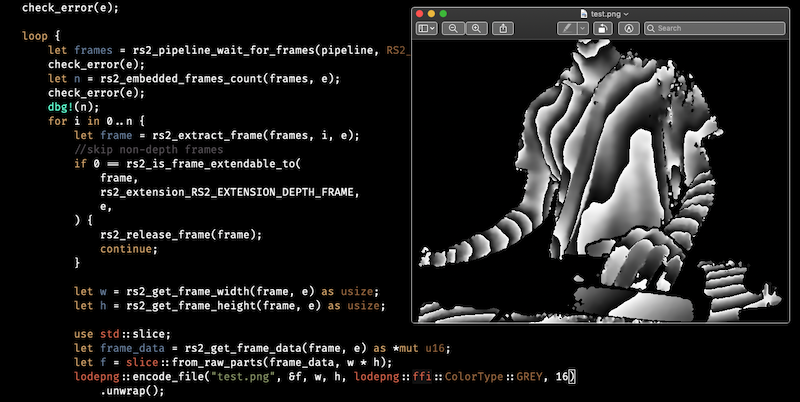

Controlling a RealSense SR305 depth camera from Rust, using bindgen to link against the librealsense C API. The RealSense camera API is comprehensive and has many nice options for controlling exposure, etc. — I’m very happy that I purchased this $100 camera rather than trying to work with consumer webcams.

Low-latency streaming of uncompressed frame data over UDP from Windows to Mac using the mio Rust library, exposing the streamed frames to OpenCV via PyO3, which lets me call Rust functions from Python.

Control of the ShopBot CNC machine from Rust via a Windows Registry-based API.

Background research on mosaic image stitching, in particular how to incorporate known camera positions into stitching algorithms.

- The Python bindings of OpenCV’s stitcher don’t expose the options necessary for changing the camera model from the default homography model (images can be related by perspective transforms; i.e., were taken from the same world space point) to an affine model (which is my case, where the camera translates between images). I didn’t want to dig further into code without knowing that I’d be able to get good mosaics at all so I decided to try and manually create a mosaic.

- I built a simple browser-based app to try and scale/transform images manually to eyeball what a composite might look like.

- Microsoft Research’s Image Composite Editor gave a decent result automatically (even without the image translation knowns).
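A minimal sketch of how known camera positions could seed a mosaic, assuming the camera looks straight down, moves by pure translation, and sees a flat scene at a constant scale. Those are exactly the assumptions that perspective distortion breaks, so this is only the idealized starting point. The function name, the `px_per_mm` scale, and the example positions are hypothetical, and the machine Y axis is assumed to point the same way as the image Y axis.

```python
def tile_offsets(positions_mm, px_per_mm, tile_size_px):
    """Return (canvas_size, offsets): where each tile's top-left corner lands."""
    tile_w, tile_h = tile_size_px
    # Convert machine coordinates (mm) to pixels.
    xs = [x * px_per_mm for x, _ in positions_mm]
    ys = [y * px_per_mm for _, y in positions_mm]
    min_x, min_y = min(xs), min(ys)
    # Shift so the smallest position maps to pixel (0, 0).
    offsets = [(round(x - min_x), round(y - min_y)) for x, y in zip(xs, ys)]
    canvas = (max(ox for ox, _ in offsets) + tile_w,
              max(oy for _, oy in offsets) + tile_h)
    return canvas, offsets


# Four gantry positions in mm, 4 px per mm, 640x480 tiles (all invented values).
canvas, offsets = tile_offsets([(0, 0), (50, 0), (0, 40), (50, 40)], 4, (640, 480))
print(canvas)   # (840, 640)
print(offsets)  # [(0, 0), (200, 0), (0, 160), (200, 160)]
```

Pasting each tile at its offset gives a translation-only composite; a stitcher would then only need to refine small residual misalignments.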

Next steps

Based on the early stitching results, I think the inherent geometry of the setup will always give too much perspective distortion to yield a reasonable orthographic-looking composite image. The best way forward may be to embrace 3D geometry (using the depth sensor and/or structure from motion algorithms) rather than trying to construct an orthographic 2D composite.

Misc. notes

The textbook Computer Vision: Algorithms and Applications is available free online and looks comprehensive.

This StackOverflow question is exactly my problem; apparently the term of art I’m looking for is “ortho-rectification”; Wikipedia has some discussion w.r.t. aerial photography: orthophoto.

Copy/pasting a file from a Windows Parallels virtual machine to Mac will somehow lock or otherwise mess up the permissions of the file on the Windows side such that the file cannot be modified, deleted, etc. — to fix this, copy/paste some other file to free up the original. (Ask me how long it took me to figure that nonsense out…)

Coolest selfie this week from 16-bit depth accidentally written in reverse endian. See Twitter explanation:
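For reference, a small sketch of how a byte-order mix-up scrambles 16-bit depth values. The depth reading is made up, and which byte order the original code got wrong is not stated here.

```python
import struct

depth_mm = 1000                      # a made-up 16-bit depth reading
raw = struct.pack("<H", depth_mm)    # written little-endian: bytes e8 03
right = struct.unpack("<H", raw)[0]  # read back with the same byte order
wrong = struct.unpack(">H", raw)[0]  # same bytes read as big-endian

print(raw.hex(), right, wrong)  # e803 1000 59395
```

Because the low byte becomes the high byte, small changes in true depth turn into large jumps in the misread value, which is why the resulting image looks banded rather than simply darker or lighter.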

2019 Sept 22

Updates since last week:

Repositioned Wyze IP camera to get more of a “top down” view of the machine bed.

Calibrated camera using a Charuco board, reaching 0.4 RMSE:

/opt/opencv-shared/bin/opencv_interactive-calibration -v="rtsp://root@192.168.1.7:8554/unicast" --h=5 --w=7 --sz=0.288 --t=charuco
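A short sketch of what that number measures, assuming the 0.4 figure is the RMS reprojection error in pixels that OpenCV's calibration tools report: the root-mean-square distance between the detected board corners and where the fitted camera model projects them. The coordinates below are invented for illustration.

```python
import math


def rms_reprojection_error(observed, projected):
    """Root-mean-square distance between detected and reprojected points."""
    squared = [(ox - px) ** 2 + (oy - py) ** 2
               for (ox, oy), (px, py) in zip(observed, projected)]
    return math.sqrt(sum(squared) / len(squared))


# Invented pixel coordinates: detected corners vs. the model's predictions.
observed = [(100.0, 100.0), (200.0, 100.0), (200.0, 200.0), (100.0, 200.0)]
projected = [(100.3, 100.4), (200.0, 99.5), (199.6, 200.3), (100.0, 200.5)]

print(round(rms_reprojection_error(observed, projected), 3))  # 0.5
```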

Printed fiducials to use for machine positioning (eliminates manual corner selection from last week). I’m using strips of markers so things will be more robust to noise and potential occlusion due to clamps.

I generated a printable PDF of fiducials from this website, using Firefox dev tools to add precise margins and borders, which I then cut out with an x-acto and taped to the machine bed.

Pulled ShopBot position information out of a Windows Registry-based API (!!!???!) Thanks to the ShopBot team for quick email support and Graeme from Coogara Consulting for discussion of an alternative, Windows-app-UI-scraping approach for retrieving ShopBot coordinates.

Here’s how to pull ShopBot location info using Python 3:

import winreg
k = winreg.OpenKey(winreg.HKEY_CURRENT_USER, r"Software\VB and VBA Program Settings\ShopBot\UserData")
winreg.QueryValueEx(k, "Loc_1")  # returns my X location: ('20.700', 1)
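A hedged sketch of wrapping that lookup so the string value comes back as a float. The helper names are hypothetical, and only the `Loc_1` value name is known from the snippet above; names for other axes would need checking against the actual registry. The registry access is injected as a callable so the parsing logic also runs on non-Windows machines.

```python
def parse_location(raw):
    """Convert a QueryValueEx result such as ('20.700', 1) to a float."""
    value, _value_type = raw
    return float(value)


def read_axis(query, name="Loc_1"):
    """Read one axis position through `query`, a callable taking a value name."""
    return parse_location(query(name))


def make_registry_query():
    """Build a query function backed by the real registry (Windows only)."""
    import winreg  # imported lazily so the rest of the code runs on any OS
    key = winreg.OpenKey(
        winreg.HKEY_CURRENT_USER,
        r"Software\VB and VBA Program Settings\ShopBot\UserData",
    )
    return lambda name: winreg.QueryValueEx(key, name)


# Stand-in for the registry so the example runs anywhere.
fake_registry = {"Loc_1": ("20.700", 1)}
x = read_axis(fake_registry.__getitem__)
print(x)  # 20.7
```

On the CNC control computer, `read_axis(make_registry_query())` would read the live value instead of the stand-in.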

Next steps

Use RealSense SR305 depth camera instead of cheap Wyze IP camera. Since the RealSense uses USB 3, it’ll be attached to the CNC control computer, so I’ll need to write a low-CPU-overhead program that can stream data from the camera to my design computer.

Explore options for CNC-machinable fiducials (I mean, why the heck am I trying to carefully cut and tape paper to a machine that can easily cut stuff to within 200 μm?) I’m thinking some kind of drilled hole pattern in the spoilboard.

Mount a camera (or USB borescope?) to the gantry and create high resolution images by stitching together multiple frames.
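On the streaming step above: one uncompressed 640x480 frame of 16-bit depth is 614,400 bytes, far more than fits in a single UDP datagram, so frames have to be split and reassembled. A minimal sketch of that bookkeeping follows; the header layout, payload size, and function names are all hypothetical, not the protocol of any existing tool.

```python
import struct

HEADER = struct.Struct("<IHH")  # frame id, chunk index, chunk count (hypothetical)
MAX_PAYLOAD = 1400              # stays under a typical Ethernet MTU


def split_frame(frame_id, data, max_payload=MAX_PAYLOAD):
    """Split one frame's bytes into datagrams, each prefixed with a small header."""
    chunks = [data[i:i + max_payload]
              for i in range(0, len(data), max_payload)] or [b""]
    return [HEADER.pack(frame_id, index, len(chunks)) + chunk
            for index, chunk in enumerate(chunks)]


def reassemble(datagrams):
    """Rebuild a frame from its datagrams (any order); None if a chunk is missing."""
    parts, count = {}, None
    for datagram in datagrams:
        _frame_id, index, count = HEADER.unpack_from(datagram)
        parts[index] = datagram[HEADER.size:]
    if count is None or len(parts) != count:
        return None
    return b"".join(parts[i] for i in range(count))


frame = bytes(640 * 480 * 2)  # one blank 640x480 frame of 16-bit depth values
packets = split_frame(1, frame)
print(len(packets))  # 439
```

Each packet could then be sent with `socket.sendto`. Since UDP can drop or reorder datagrams, a receiver that gets an incomplete frame would simply discard it and wait for the next one, which suits a live preview.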

2019 Sept 14

Current status:

- $26 Wyze Cam flashed with open source firmware streams RTSP video over wifi

- Python script pulls a JPEG from the camera stream and inserts it into Inventor via the COM API

- Machinist adds sketch points around known sized rectangle (e.g., sheet of paper on machine bed)

- Python script uses these points to calculate perspective transform, yielding an “overhead view” of the sheet, which is shown in a second Inventor sketch

- Machinist can then use this view to do measurements, setup CAM, verify tool/workholding clearances, etc.
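The perspective-transform step above can be sketched in pure Python, assuming four clicked corners and a sheet of known size. The corner coordinates, the US Letter dimensions, and the function names are hypothetical; OpenCV's `cv2.getPerspectiveTransform` computes the same 3x3 matrix from four point pairs. Each point pair contributes two linear equations, giving an 8x8 system for the eight unknown matrix entries.

```python
def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def perspective_transform(src, dst):
    """Return the 3x3 homography mapping four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # From u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), and likewise for v.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_transform(h, point):
    """Map an image point through the homography (with perspective divide)."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical pixel coordinates of the paper's corners, as clicked in the sketch.
corners_px = [(412, 310), (905, 295), (980, 620), (350, 655)]
# US Letter sheet in millimetres, in the same corner order.
corners_mm = [(0, 0), (279.4, 0), (279.4, 215.9), (0, 215.9)]

H = perspective_transform(corners_px, corners_mm)
print(apply_transform(H, (905, 295)))  # approximately (279.4, 0.0)
```

Any other pixel on the bed plane can be pushed through `apply_transform` to get its position in millimetres, which is what makes the overhead view usable for measurement.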

Early explorations on computer vision and Inventor COM programming were done over a few pairing sessions with Geoffrey Litt; check out their notes.

I have a few technical constraints:

- works on Windows 10

- easy one-time install or (even better) a self-contained EXE binary

- ideally small size (< 10 MB)

- near term, Inventor-only; longer term should be extensible to other CAD programs (e.g., Fusion 360, SolidWorks)

and I’ve explored several technical architectures for this project.

Python + OpenCV

Since I’ve never been able to manage Python deps on Mac/Linux (every time I Google, there’s a new “solution”), I assumed it’d be terrible on Windows.

But then I found PyInstaller, which I got working in 2 minutes: pyinstaller --onefile my_program.py creates a self-contained EXE.

Yay!

The resulting EXE is 5 MB for a “Hello World”, but importing OpenCV balloons it to 50 MB, which I’m not thrilled about.

I’m using the win32com Python library. It works, but is slow — takes about 1s to read the coordinates of a few dozen points from an Inventor sketch. TBD whether that’s Python/COM stuff or inherent to Inventor inter-process COM.

For exploring Python and new libraries, it’s been helpful to have automatic code reloading.

This script automatically reloads the cncv module and runs/“benchmarks” its main function.

This lets me change code and immediately see the results without having to switch windows and restart anything manually.

import importlib
import time
import os

import cncv as target_mod

LAST_MODIFY_TIME = 0


def maybeReload(module):
    global LAST_MODIFY_TIME
    lt = os.path.getmtime(module.__file__)
    if lt != LAST_MODIFY_TIME:
        LAST_MODIFY_TIME = lt
        try:
            importlib.reload(module)
            print("reloaded!")
        except Exception as e:
            # swallow exception so that reloader doesn't crash
            print(e)


while True:
    maybeReload(target_mod)
    start = time.process_time()
    try:
        target_mod.main()
    except Exception as e:
        # swallow exception so that runner doesn't crash
        print(e)
    dt = time.process_time() - start
    # print(dt)
    time.sleep(1)

Rust

Since the vision logic I needed is pretty basic linear algebra, I thought I’d try doing it in pure Rust, without OpenCV. I tried to cross compile from Mac to Windows following this post but it required copying some files from the Visual Studio install on Windows to my Mac — at which point I decided I might as well just compile on Windows.

I then tried implementing basic linear algebra in Rust. However, I found nalgebra quite frustrating: There are a lot of types to write out, which makes it much more verbose than Python/NumPy. E.g.,

let mut center = points
    .iter()
    .fold(Vec2::<f64>::new(0.0, 0.0), |c, x| c + x.coords);
center.apply(|x| x / points.len() as f64);

versus

center = np.mean(points, axis=0)

Also (because types) there’s no 8x8 Matrix constructor, so I gotta write:

let a = MatrixMN::<f64, U8, U8>::from_row_slice(&[1.0, ...])

compared to Python/NumPy’s

a = np.array([[1.0, ...], ...])

Another blocker in Rust was that after a few hours of research, I couldn’t figure out how to access a COM API.

I probably have to use winapi (which exposes a function called CoInitializeEx, a COM thing), but the docs aren’t great and my searches for COM “Hello world” examples on GitHub fell short.

C++ and OpenCV

I don’t know C++ and am not thrilled about the idea of picking it up. (I learned Rust specifically because I didn’t want to learn about C++ footguns.)

However both the Inventor SDK sample AddIns and many OpenCV examples are written in C++.

I first had to compile OpenCV with static libraries (I did all this on OS X, since I’m more familiar there and assume I could do it again in Windows if it seemed promising):

brew install cmake pkg-config jpeg libpng libtiff openexr eigen tbb
git clone https://github.com/opencv/opencv && git clone https://github.com/opencv/opencv_contrib
cd opencv && mkdir build && cd build
cmake -D CMAKE_BUILD_TYPE=RELEASE \
    -D CMAKE_INSTALL_PREFIX=/opt/opencv \
    -D OPENCV_EXTRA_MODULES_PATH=../../opencv_contrib/modules \
    -D BUILD_opencv_python2=OFF \
    -D BUILD_opencv_python3=OFF \
    -D INSTALL_PYTHON_EXAMPLES=OFF \
    -D INSTALL_C_EXAMPLES=OFF \
    -D OPENCV_ENABLE_NONFREE=OFF \
    -D BUILD_SHARED_LIBS=OFF \
    -D BUILD_EXAMPLES=OFF ..
make -j

I then compiled this aruco-markers demo.

I had to add/update these lines in CMakeLists.txt:

set(OpenCV_DIR /opt/opencv/lib/cmake/opencv4)

find_package (Eigen3 3.3 REQUIRED NO_MODULE)

target_link_libraries(detect_markers opencv_core Eigen3::Eigen opencv_highgui opencv_imgproc opencv_videoio opencv_aruco)

then run

cd detect_markers && mkdir build && cd build && cmake ../ && make

to spit out a binary. It’s 37 MB, but upx shrinks it down to 16 MB.

Another potential reason to go C++ is to compile an Inventor AddIn, which runs “in-process” in Inventor and may be faster than using the COM API.

Open questions

Rust / COM API: It’s gotta be possible. Can anyone point to an example (either in Rust, C, or C++)?

Better camera: I don’t know much about optics, but for the problem of precise measurement of still objects under controlled lighting, I think I just want as many megapixels as possible. What’s the most cost-effective way to do that? My more detailed question on Computer Vision Reddit didn’t turn up any leads.

Next steps

Automatically detect machine plane using fiducial markers rather than relying on a machinist to manually place points. (But possibly add a “debug sketch” to allow machinists to reposition or correct auto-placed points?)

Calibrate Wyze camera

Test accuracy

Figure out how to map machine-space into vision-space. Add fiducial to spindle? Edge detect endmill position?

I’ll continue in Python+OpenCV since it’s working, which outweighs my (largely aesthetic) concerns about performance and distribution size.

Other notes

I bought a $79 RealSense SR305 coded-light depth camera. I tried streaming USB from the control computer to the design computer using VirtualHere, but while the USB camera was detected on the design computer, Intel’s viewer software didn’t pick it up. (The viewer does pick it up if the camera is directly connected.) Maybe because VirtualHere doesn’t support USB3?

To compile Rust on Windows, you need Visual Studio Build Tools C++ tools. There is way to download package in advance / offline install — it must be done in Visual Studio installer.

When compiling Rust, set powershell env variable so cargo compiles to VM desktop rather than network drive. (https://github.com/rust-lang/rust/issues/54216#issuecomment-448282142)

[Environment]::SetEnvironmentVariable("CARGO_TARGET_DIR", "C:\Users\itron\Desktop")

Also set powershell to be like emacs:

Set-Executionpolicy RemoteSigned -Scope CurrentUser

notepad $profile

and add this line:

Set-PSReadLineOption -EditMode Emacs

Inventor ships with an SDK installer (in C:\Users\Public\Documents\Autodesk\Inventor 2020\SDK), but the installer won’t unpack anything unless it detects Visual Studio — and it’s not forwards compatible!

I unpacked manually via

msiexec /a developertools.msi TARGETDIR=C:\inventor_sdk

so that I could access the header files and Visual Studio templates.

Trying to compile the sample Inventor AddIn failed with “RxInventor.tlb not found”, but changing InventorUtil.h to have this line:

#import "C:\Program Files\Autodesk\Inventor 2020\Bin\RxInventor.tlb" no_namespace named_guids...

resolved the issue, and the addin compiled and connected to Inventor.

Python COM will throw an exception if i.ReferencedFileDescriptor is accessed for SketchImage i while that SketchImage is being resized in Inventor.