Administrative notes

TinyLetter (my newsletter infrastructure provider) is shutting down at the end of the month, so the next newsletter will likely look somewhat different. Don’t be alarmed.

Also: Apologies for sending out the last newsletter with a bunch of broken links.

The former process (manually running copy($0.innerHTML) from Chrome DevTools) has now been replaced with a script which checks for relative links (which won’t work in an email) and then copies the HTML to the clipboard for me to send out to y'all.
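As a rough illustration of the relative-link check (a sketch only, not the actual script, which isn't shown here), the idea is to collect every href that doesn't start with an absolute scheme. The function name `relative_links`, the list of accepted prefixes, and the assumption that attributes are always written as href="..." with double quotes are all made up for this example:

```rust
/// Returns every `href` target in `html` that is not an absolute link.
/// Simplifying assumption: attributes are always written as href="..."
/// with double quotes; a real checker would use an HTML parser.
fn relative_links(html: &str) -> Vec<&str> {
    // Prefixes treated as "safe to send in an email" (an assumption).
    const ABSOLUTE_PREFIXES: [&str; 4] = ["http://", "https://", "mailto:", "tel:"];
    const NEEDLE: &str = "href=\"";

    let mut found = Vec::new();
    let mut rest = html;
    while let Some(start) = rest.find(NEEDLE) {
        let after = &rest[start + NEEDLE.len()..];
        // Stop if the attribute is never closed.
        let Some(end) = after.find('"') else { break };
        let target = &after[..end];
        if !ABSOLUTE_PREFIXES.iter().any(|p| target.starts_with(p)) {
            found.push(target);
        }
        rest = &after[end + 1..];
    }
    found
}
```

An empty result would mean the HTML is safe to copy; anything else is a link that would break once it leaves the website.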

Upcoming projects

Last summer in London I found myself with access to a severely underutilized Haas mill and had a go at exploring a bit of mixed round wood / 3d printed joinery:

I’d like to pick this back up in Amsterdam and make some furniture or houseware pieces.

It’s also a great excuse to make/modify a small CNC router (and compete with my friend Szymon).

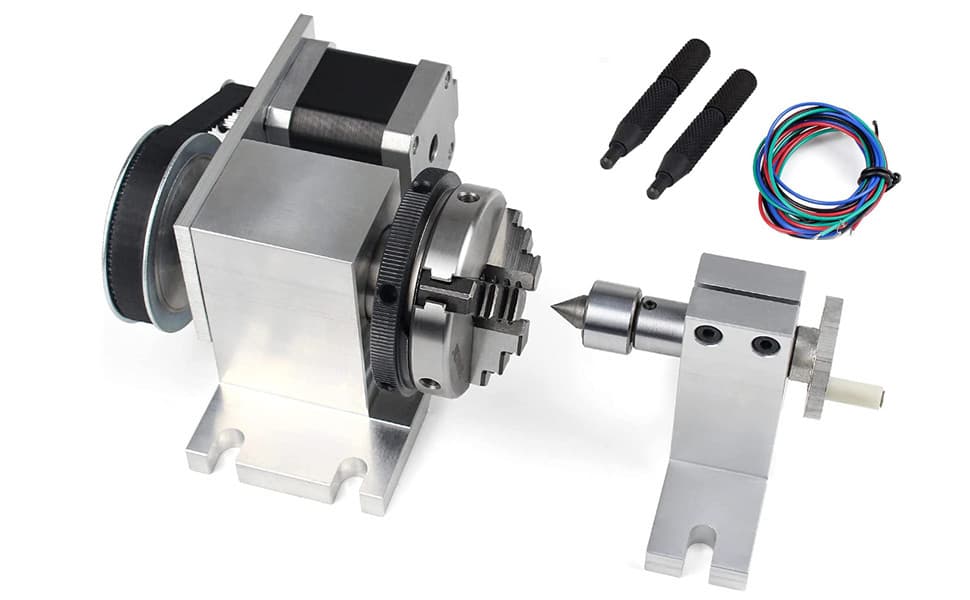

Since I just want to cut joints on round stock, the working volume can be small (10 cm cube). However, all of the inexpensive “bolt onto your CNC bed” rotary axis kits I’ve found consist of a non-pass-through chuck and tail stock:

so I may need to design my own clamping and indexing mechanism.

If you are interested in collaborating on either the furniture or machine design/build, please drop me a line!

Hobby PLC / modular electronics prototyping

I further explored some of the hobby PLC ideas we discussed in September. Between the Hacker News comments on my article, discussions with more experienced friends, and a lot of Googling, I found several notable existing solutions:

- TinkerForge is a small German company that makes pretty much exactly what I was thinking about: inexpensive (~$20–50) PCB modules that connect together and expose (via IP over USB / Ethernet / Wifi) functionality via decent programmatic and graphical interfaces (including out-of-the-box logging capabilities)

- MikroBus is a standard breakout board footprint with 1000+ boards; it seems like the typical use-case is to use a few boards on a single carrier PCB, though the interfaces still seem to be at the original chip’s level of abstraction rather than a unified communications protocol.

- Velocio are small ~$100 PLCs with a custom visual programming and UI builder and a handful of modules

- Companies like ODot Automation and Advantech sell solid-looking IO modules over RS-485 and Ethernet for ~$100–500; I’d look at these if I were doing a serious industrial / robotics / factory-environment project at the $10^3 budget level.

I really wish I’d run across these earlier, as they would’ve saved me countless hours of I2C / SPI / Arduino nonsense on past hardware projects.

Of course, finding that perfect solutions exist is no deterrent to the learning exercise of attempting my own anyway. I wanted to explore an EtherCAT-inspired protocol with the following constraints:

- minimal microcontroller/hardware requirements

- no custom communication ICs (this rules out USB, CAN, RS-485, ethernet, etc.)

- JLCPCB’s cheapest 32-bit MCU (the $0.48 stm32g030) should be able to act as a device OR controller

- suitable for < 1 W power applications (e.g., powered by USB power bank and solar)

- latency should scale linearly with IO

In particular I liked the idea of a daisy-chained network topology, which provides a natural automatic addressing scheme: The controller is connected to device 1, which is connected to device 2, etc. This would enable a simple “take the pieces from the box and assemble them together as needed” experience, without goofing around with assigning addresses to each device (via physical jumpers or flashing firmware), physically labeling devices, debugging address collisions, etc.
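One way to picture position-based addressing is a hop counter carried in each frame: every device either consumes a frame whose counter has reached zero or decrements the counter and forwards the frame downstream. The sketch here simulates that idea; the `Frame` layout, the `hops_remaining` field, and the zero-based positions are assumptions for illustration, not ucat's actual wire format:

```rust
/// A frame travelling down the chain. `hops_remaining` acts as the address:
/// it says how many devices the frame should pass through before stopping.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Frame {
    hops_remaining: u8,
    payload: u8,
}

/// What a device does with a frame arriving from its upstream neighbor.
#[derive(Debug, PartialEq)]
enum Action {
    /// The frame is addressed to this device.
    Consume(u8),
    /// The frame is passed on to the downstream neighbor.
    Forward(Frame),
}

/// Every device runs the same logic and needs no address of its own.
fn handle(frame: Frame) -> Action {
    if frame.hops_remaining == 0 {
        Action::Consume(frame.payload)
    } else {
        Action::Forward(Frame {
            hops_remaining: frame.hops_remaining - 1,
            ..frame
        })
    }
}

/// Simulates a chain of `chain_len` devices and returns the zero-based
/// position of the device that consumed the frame, or `None` if the frame
/// ran off the end of the chain.
fn deliver(chain_len: usize, mut frame: Frame) -> Option<usize> {
    for position in 0..chain_len {
        match handle(frame) {
            Action::Consume(_) => return Some(position),
            Action::Forward(next) => frame = next,
        }
    }
    None
}
```

Because the address is just "how far down the chain", swapping two physically identical modules changes nothing from the controller's point of view, which is the property that makes jumpers and per-device firmware unnecessary.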

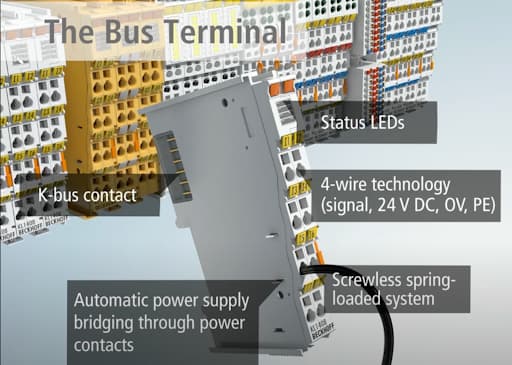

For the hardware, I wanted the PCB modules to physically “snap together” so I never needed to fuss with dupont wiring and breadboards. Ideally the vibe would be something like Beckhoff’s DIN-rail mounted bus terminals:

which connect physically and electrically when their plastic housings slide together. (ODot has a similar system.)

However, custom injection-molded plastic housings and metal connectors are a bit outside of my “cheap and cheerful, hobbyist-accessible” design constraints.

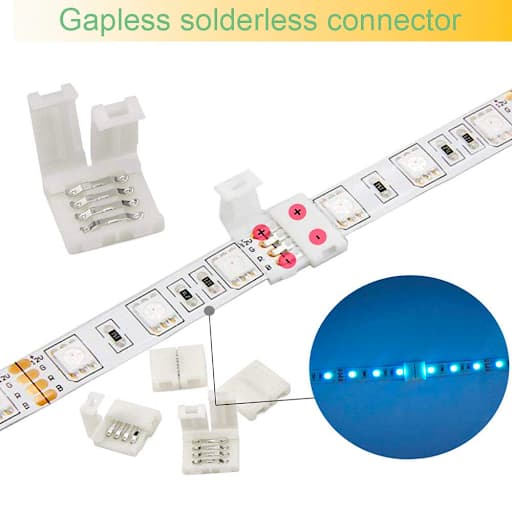

I posed the question to the venerable EEVBlog, where folks suggested I look into LED-lighting connectors designed to work with exposed copper pads (which are effectively free to create on a PCB):

Such connectors for flexible LED strips are appealing because they’re cheap, widely accessible (next-day Amazon, AliExpress), and furthermore the clip design allows a board to be pulled out of a chain without requiring its neighbors to move. (Handy if everything is rigidly mounted.) Unfortunately, these connectors are designed for thin strips, not 1.6mm thick PCBs.

For regular PCBs, the best connectors I could find were these KVX female-female card edge connectors:

though they’re spendier (~$1 each) and require boards to be shifted in-plane for connection/disconnection. (I also discovered I’m not the first person down this path — there’s an Edgy Boards open source hardware project based around these connectors too!)

All that said, I haven’t really tested any physical connections or modular grid dimension ideas — most of my exploration thus far has been in the software domain.

My software work has been split across two code repositories:

- eui: An “Embedded User Interface” library which generates a web UI for editing data based on its type definition — think of the widgets in Chrome DevTools and game data debugger/editors (e.g., Bevy inspector) that let you view and poke at “raw” data.

- ucat: “MicroCAT”, an EtherCAT-inspired protocol for controlling a daisy-chain of embedded devices from a computer or another embedded device.

However, both of these are just “proof-of-concepts”, as I don’t currently have a serious context of use in which I could fully bake either of them. I’m reminded of Andy Matuschak’s reflection from his 2023 letter on good design being about removing “misfits” between requirements and forms:

It’s often hard to find “misfits” when I’m thinking about general forms. My connection to the problem becomes too diffuse. The object of my attention becomes the system itself, rather than its interactions with a specific context of use. This leads to a common failure mode among system designers: getting lost in towers of purity and abstraction, more and more disconnected from the system’s ostensible purpose in the world.

So, lacking an actual project to further push these ideas, I’m putting them down for the time being. I’m not sure if modular PCBs will ever be in the cards, but I wouldn’t be surprised if a future project has me revisiting auto-generated debug UIs and embedded network protocols…

Thoughts on Rust

Working on ucat forced me to finally grapple with async Rust, and I now appreciate what an impressive accomplishment it is. Previously in a desktop / web context, async felt like an annoyance — “what are these hoops I have to jump through?” (What Color is Your Function?), “just spin up another thread, it’s fine”.

But in a bare metal embedded environment where there are no threads, being able to coordinate, e.g., waiting across multiple timers and for external events with simple “straight line” code is incredible. Delegating all of the inherent state-machine bookkeeping to Rust’s async machinery is much nicer than writing it out manually.
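To make the "state-machine bookkeeping" concrete, here is a sketch of what a simple blink task looks like when written by hand for a polling main loop. With async, the same behavior would be roughly two awaited delays inside a loop. The `Blinker` type, its fields, and the millisecond clock passed into `poll` are all hypothetical names for this example:

```rust
/// Hand-written state for a task that would otherwise read as:
///     loop { led_on(); wait(on_ms).await; led_off(); wait(off_ms).await; }
#[derive(Debug, Clone, Copy, PartialEq)]
enum Blink {
    On { until_ms: u64 },
    Off { until_ms: u64 },
}

struct Blinker {
    state: Blink,
    on_ms: u64,
    off_ms: u64,
}

impl Blinker {
    /// Starts with the LED on at time `now_ms`.
    fn new(now_ms: u64, on_ms: u64, off_ms: u64) -> Self {
        Blinker {
            state: Blink::On { until_ms: now_ms + on_ms },
            on_ms,
            off_ms,
        }
    }

    /// Called repeatedly from the main loop with the current time.
    /// Advances the state if a deadline has passed and returns whether
    /// the LED should currently be lit.
    fn poll(&mut self, now_ms: u64) -> bool {
        self.state = match self.state {
            Blink::On { until_ms } if now_ms >= until_ms => Blink::Off {
                until_ms: now_ms + self.off_ms,
            },
            Blink::Off { until_ms } if now_ms >= until_ms => Blink::On {
                until_ms: now_ms + self.on_ms,
            },
            unchanged => unchanged,
        };
        matches!(self.state, Blink::On { .. })
    }
}
```

Every additional thing to wait on (a second timer, a button press, a byte arriving over UART) adds more variants and more transitions to a type like this, which is the part async takes over.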

The best introduction to async in an embedded context is this intro to lilos, a minimal async RTOS. See also the embassy async embedded framework, which is more popular and includes hardware code for common chip families like STM32, ESP32, and the RP2040. For broader context on the value of async, see Boats’s Let futures be futures.

However, as much as I appreciate Rust’s async machinery, working on this project I found myself running into many of the same frustrations I did back when I was developing keyboard firmware in Rust three years ago. Although I’m now more familiar with Rust’s more complex features — traits, associated types, syntax and proc macros, etc. — I’m still often left with the same feelings of frustration: Why is this so hard?

My most common frustration stems from two “good ideas” interacting poorly.

The first idea is the embedded ecosystem’s cultural norm of using the Rust type system to both:

- model the hardware’s capabilities

- enforce disjoint access/ownership of the hardware’s pins, peripheral instances, etc.

Largely this is a huge benefit: Instead of a crash or undefined runtime behavior, the compiler tells you “oh, you can’t call that method on pin 17 because the hardware can’t do that.”

Not just for fixed capabilities, either — the typestate pattern (phantom types) is often used so that when you, e.g., set a pin to be an InputPin, you can’t later set its voltage high or low (you have to set it back to be an OutputPin first).
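A minimal sketch of the typestate pattern, using a simulated pin rather than any real HAL crate. The `Pin`, `Input`, and `Output` names and the `driven_high` field are invented for this example; actual HALs differ in naming and detail:

```rust
use std::marker::PhantomData;

/// Marker types for the pin's current mode (hypothetical names).
struct Input;
struct Output;

/// A simulated GPIO pin whose mode is tracked in the type parameter.
/// `PhantomData` carries the mode without storing anything at runtime.
struct Pin<Mode> {
    number: u8,
    driven_high: bool,
    _mode: PhantomData<Mode>,
}

impl Pin<Input> {
    fn new(number: u8) -> Self {
        Pin { number, driven_high: false, _mode: PhantomData }
    }

    /// Consumes the input pin, so the old handle cannot be used afterwards.
    fn into_output(self) -> Pin<Output> {
        Pin { number: self.number, driven_high: false, _mode: PhantomData }
    }
}

// These methods exist only on `Pin<Output>`. Calling `set_high()` on a
// `Pin<Input>` is rejected by the compiler with a "no method found" error.
impl Pin<Output> {
    fn set_high(&mut self) {
        self.driven_high = true;
    }

    fn set_low(&mut self) {
        self.driven_high = false;
    }

    fn is_set_high(&self) -> bool {
        self.driven_high
    }

    fn into_input(self) -> Pin<Input> {
        Pin { number: self.number, driven_high: false, _mode: PhantomData }
    }
}
```

The mode change is a move (`self` by value), so ownership and typestate work together: there is never a stale `Pin<Input>` handle lying around after the pin has become an output.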

However, these complex types interact poorly with a deliberate design decision of Rust itself: To infer types within functions, but require types to be explicitly written out in function signatures.

This manifests as a tremendous amount of paperwork when extracting code into a new function or async task.

For example, say we want to read a temperature sensor over an SPI bus.

Within a single main function, this is relatively straightforward (see esp32-hal if you’re following along at home).

First we do some general setup:

    let peripherals = Peripherals::take();
    let io = IO::new(peripherals.GPIO, peripherals.IO_MUX);
    let system = peripherals.SYSTEM.split();
    let clocks = ClockControl::max(system.clock_control).freeze();

Then set up the SPI bus for our sensor:

    let mut spi = Spi::new(peripherals.SPI3, 4u32.MHz(), SpiMode::Mode0, &clocks)
        .with_miso(io.pins.gpio10)
        .with_cs(io.pins.gpio11)
        .with_sck(io.pins.gpio12);

Finally we start a loop to read the sensor and print the results every half second:

    let mut buf = [0u8; 2];
    loop {
        embedded_hal_async::spi::SpiBus::read(&mut spi, &mut buf)
            .await
            .unwrap();
        Timer::after(Duration::from_millis(500)).await;
        let temp_c = 0.25 * (u16::from_be_bytes(buf) >> 3) as f32;
        log!("the temp is: {}", temp_c);
    }
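The conversion expression in the loop can be pulled out and checked on its own, away from the hardware. This sketch assumes what the expression implies: the two bytes arrive big-endian, the low three bits are not part of the reading, and each remaining count is worth 0.25 °C. The sensor model isn't named, and the function name `decode_temp_c` is made up:

```rust
/// Converts the two bytes read over SPI into degrees Celsius, using the
/// same arithmetic as the read loop.
fn decode_temp_c(buf: [u8; 2]) -> f32 {
    // Reassemble the 16-bit word, most significant byte first.
    let raw = u16::from_be_bytes(buf);
    // Discard the low three bits (assumed to be status/unused bits).
    let counts = raw >> 3;
    // Each count is a quarter of a degree.
    0.25 * counts as f32
}
```

For example, the bytes 0x03 0x20 form the word 0x0320 (800); shifting right by three gives 100 counts, i.e. 25.0 °C.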

The trouble comes when we want to extract the latter two parts — the SPI setup and read loop — into a standalone async task. Doing so requires we painfully write out all of the types:

    #[main]
    async fn main(spawner: embassy_executor::Spawner) {
        let peripherals = Peripherals::take();
        let io = IO::new(peripherals.GPIO, peripherals.IO_MUX);
        let system = peripherals.SYSTEM.split();
        let clocks = ClockControl::max(system.clock_control).freeze();

        spawner
            .spawn(read_temp_task(
                peripherals.SPI3,
                &clocks,
                io.pins.gpio10,
                io.pins.gpio11,
                io.pins.gpio12,
            ))
            .ok();
    }

    #[embassy_executor::task]
    async fn read_temp_task(
        spi: esp32s3_hal::peripherals::SPI3,
        clocks: &esp32s3_hal::clock::Clocks<'static>,
        miso: esp32s3_hal::gpio::GpioPin<esp32s3_hal::gpio::Unknown, 10>,
        cs: esp32s3_hal::gpio::GpioPin<esp32s3_hal::gpio::Unknown, 11>,
        sck: esp32s3_hal::gpio::GpioPin<esp32s3_hal::gpio::Unknown, 12>,
    ) {
        let mut spi = Spi::new(spi, 4u32.MHz(), SpiMode::Mode0, &clocks)
            .with_miso(miso)
            .with_cs(cs)
            .with_sck(sck);

        let mut buf = [0u8; 2];
        loop {
            embedded_hal_async::spi::SpiBus::read(&mut spi, &mut buf)
                .await
                .unwrap();
            Timer::after(Duration::from_millis(500)).await;
            let temp_c = 0.25 * (u16::from_be_bytes(buf) >> 3) as f32;
            debug!("temp: {}; bytes: {:?}", temp_c, buf);
        }
    }

(Note: this is actually an abbreviated version — in practice DMA is required, but I felt that writing out the clocks and GPIO pins was sufficient to convey the point.)

There’s a ton of friction in discovering and writing out these types, which were all previously “hidden” from us thanks to local type inference.

What bothers me more than the friction, though, is that writing out the types turns the situation into a farce: The programmer is writing a function (a mechanism for abstraction and reuse), whose arguments are all owned singleton types — thus making the function neither abstract nor reusable.

In theory, reuse is achievable using generics. However, in practice:

Microcontrollers often have many seemingly arbitrary, complex constraints stemming from their internal chip layout. E.g., SPI 3’s MISO signal can only be routed to GPIO pin 4 or pin 7. These relationships would need to be spelled out in the generic types, manifesting as complex signatures. It’d be something like:

    async fn read_temp_task<Spi, DmaChannel, MisoPin, CsPin, SckPin>(
        spi: Spi,
        clocks: &esp32s3_hal::clock::Clocks<'static>,
        dma_channel: DmaChannel,
        miso: MisoPin,
        cs: CsPin,
        sck: SckPin,
    ) where
        Spi: SpiInstance<DMA = DmaChannel>,
        MisoPin: Into<InputPin> + MisoPin<Instance = Spi>,
        CsPin: Into<OutputPin> + CsPin<Instance = Spi>,
        SckPin: Into<OutputPin> + SckPin<Instance = Spi>,
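For a version of this idea that actually compiles, here is a much smaller self-contained sketch that encodes only the "MISO can be routed to pin 4 or pin 7" constraint from the example. Every type and trait in it (`Spi3`, `Gpio4`, `MisoPinFor`, `describe_wiring`, and so on) is invented for illustration and does not correspond to esp32-hal's real API:

```rust
// Hypothetical zero-sized types standing in for a chip's peripherals and pins.
struct Spi3;
struct Gpio4;
struct Gpio7;
#[allow(dead_code)]
struct Gpio10;

/// Marks a type as an SPI peripheral instance.
trait SpiInstance {
    const NAME: &'static str;
}

impl SpiInstance for Spi3 {
    const NAME: &'static str = "SPI3";
}

/// Implemented only for pins that the (made-up) chip can route to the
/// MISO signal of SPI instance `S`.
trait MisoPinFor<S: SpiInstance> {
    const NUMBER: u8;
}

impl MisoPinFor<Spi3> for Gpio4 {
    const NUMBER: u8 = 4;
}

impl MisoPinFor<Spi3> for Gpio7 {
    const NUMBER: u8 = 7;
}

/// Generic over any SPI instance and any pin that is valid as its MISO.
/// `describe_wiring(Spi3, Gpio10)` does not compile, because `Gpio10`
/// has no `MisoPinFor<Spi3>` implementation.
fn describe_wiring<S, M>(_spi: S, _miso: M) -> String
where
    S: SpiInstance,
    M: MisoPinFor<S>,
{
    format!("{} MISO on GPIO{}", S::NAME, <M as MisoPinFor<S>>::NUMBER)
}
```

Even this single constraint needs two traits, three impls, and a where clause; multiplying that by every signal, DMA channel, and clock is where the paperwork comes from.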

I understand how we got here, but I find the end result discomforting. It feels like I have a Sudoku solver which tells me when I’m wrong, but still requires me to fill in all of the cells myself.

Also: I don’t want to play Sudoku.

I’m reminded of what Andy said:

The object of my attention becomes the system itself, rather than its interactions with a specific context of use.

When Rust asks me to make types explicit so that it can analyze the correctness of each function on its own, it’s forcing me to pay attention to it rather than to reading my temperature sensor.

I’m not saying Rust is wrong to do so: its designers are trying to make a systems language suitable for massive teams and codebases, balancing compiler performance with analysis tractability and a million other things. I’m simply noticing that it is forcing me to pay attention to its needs, and that makes me wonder about points in the broader design space that might be less needy.

I.e., can we statically prove safety considerations like disjoint runtime peripheral access, without the bureaucratic overhead of explicitly writing out all of the types and their relationships in every function signature?

In my Pinfigurator language I explored using a full-on SMT solver rather than the “solver” of the Rust type system. Looking back, I’m still quite pleased with how both the ideas and initial prototype turned out, so it may be due a revisit…

Another alternative is to have a programming language that approaches type/ownership analysis differently. For example, rather than constraining generic types upfront with trait bounds:

    trait FooTrait {
        fn foo(&self) -> usize;
    }

    pub fn f<T: FooTrait>(x: T) -> usize {
        x.foo() + 1
    }

so that functions can be analyzed “on their own”, a language could instead analyze functions only as driven by specific contexts of use.

The Lobster language takes this approach, analyzing functions (at compile time) only when they are called, and only based on the concrete arguments. E.g., it’s perfectly fine to define a function simply as

    def f(x): x.foo() + 1

and the x will be an (implicit) generic type.

As long as you only call f with types that have a foo method, you’re golden.

No need to write out a FooTrait or make up a one-letter name for the generic type of x.
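For a feel of this style without leaving Rust, declarative macros behave similarly: a macro_rules! body is expanded first and type-checked only at each place it is used, so no trait or type parameter has to be declared up front. This is only an analogy, not a claim about how Lobster is implemented, and the types `A` and `B` are made up for the example:

```rust
/// Expanded, then type-checked, separately at every call site. Nothing here
/// says what `$x` must be, only that the expansion has to compile.
macro_rules! f {
    ($x:expr) => {
        $x.foo() + 1
    };
}

struct A;

impl A {
    fn foo(&self) -> usize {
        41
    }
}

struct B(usize);

impl B {
    // An unrelated type with its own inherent `foo`; no shared trait.
    fn foo(&self) -> usize {
        self.0 * 2
    }
}
```

Both `f!(A)` and `f!(B(10))` compile, while `f!(3u8)` fails at the call site with a "no method named `foo`" error. That late, use-site error is also the downside mentioned next: the definition alone does not tell you what is acceptable.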

There are obvious downsides, of course (e.g., you can’t tell from just the signature what x is supposed to be), but I suspect that, for my programming needs anyway, this part of the design space would spark more joy.

(A fun, though likely cursed, project could be to bootstrap a language that “compiles down” into Rust and just writes out the types for you.)

Finally, although not my favorite “solution”, a pragmatic approach to deal with any kind of bureaucracy is, of course, ChatGPT. I find it particularly helpful in Rust, as I can check whether the generated code matches my intent and rely on the Rust compiler to catch incorrect/nonexistent types, method names, etc.

I was initially skeptical about the AI hype, but several friends encouraged me to pay for GPT-4 and keep trying to write better prompts, and I’m glad they did as I now find it quite useful! In the spirit of paying it forward, here are some example conversations so you can judge for yourself:

In rust I’d like to use the smol crate and write an integration test between two async tasks: a controller and a device. These tasks communicate over serial ports and the tasks take the embedded_io_async::Read and embedded_io_async::Write traits as arguments. Can you please scaffold an example sync function that I can use as a basis for my tests? transcript

Relying on just the methods of the Rust PAC crate for the stm32g030, can you please write a function that configures a DMA transfer to forward one byte at a time from UART1 RX to UART2 TX? After this function has been run, the device should forward anything received over UART1 to UART2 without CPU involvement. transcript

The async rust book says: “Some functions require the futures they work with to be Unpin. To use a Future or Stream that isn’t Unpin with a function that requires Unpin types, you’ll first have to pin the value using either Box::pin (to create a Pin<Box<T>>) or the pin_utils::pin_mut! macro (to create a Pin<&mut T>). Pin<Box<Fut>> and Pin<&mut Fut> can both be used as futures, and both implement Unpin.” Why is the function Box::pin used to make something Unpin? The name seems backwards. transcript

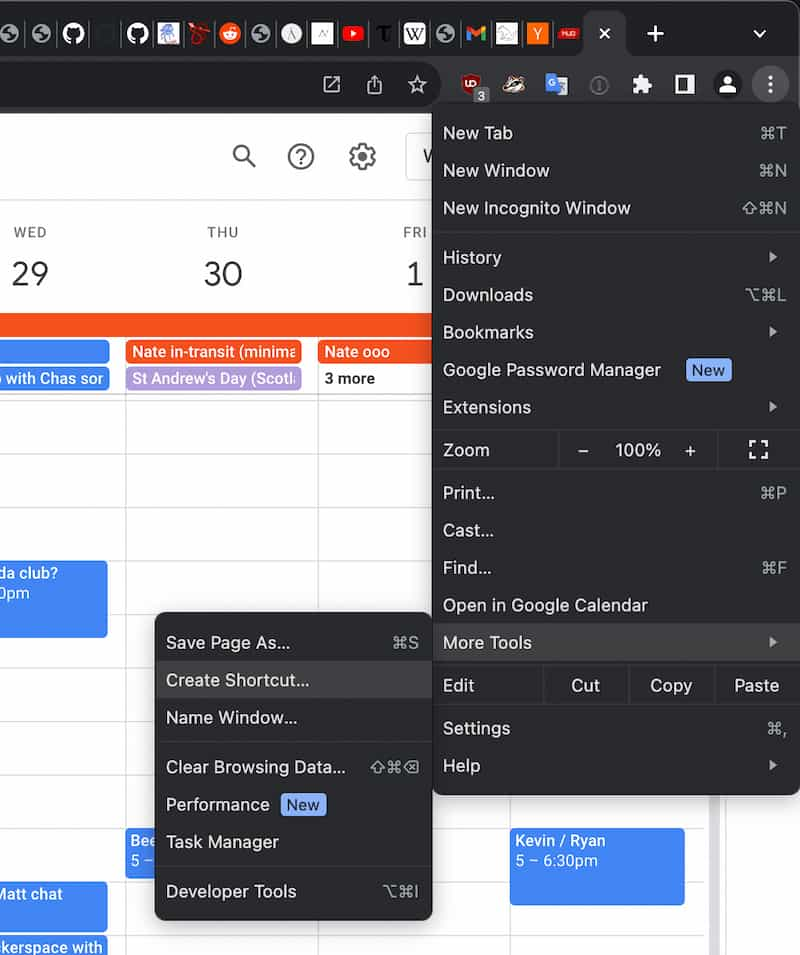

Google Calendar tip

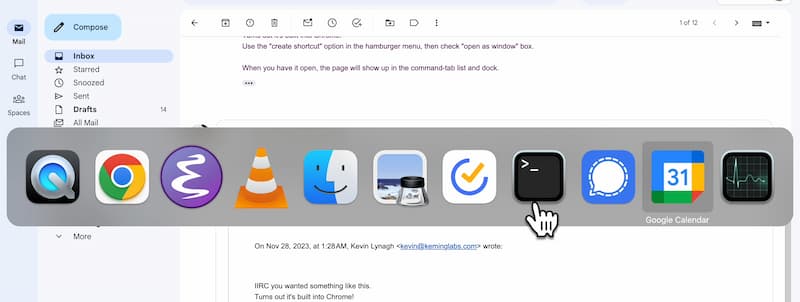

You can pop out Google Calendar into a “desktop app” that will have its own window and entry in the Dock and Command-Tab switcher. I find this useful for keeping my calendar on a specific monitor and switching to it quickly:

Marc Levinson - The Box: How the Shipping Container Made the World Smaller and the World Economy Bigger

Super fun read! I wasn’t surprised about returns to scale — bigger ships mean lower costs-per-container — but I’d never considered the downstream consequences of ships needing to sail as much as possible to service their debt, leading to port consolidation and massive boom/bust pricing cycles.

Highlights:

Long Beach had struggled through the 1950s after the pumping of oil from beneath the harbor caused the harbor floor to subside and docks to collapse. When the mess was finally cleaned up, the city-owned port found itself with a deeper harbor than Los Angeles.

The huge sums borrowed to buy ships, containers, and chassis required regular payments of interest and principal. State-of-the-art container terminals meant either debt service, if a ship line had borrowed to build its own terminal, or rent, if the terminal was leased from a port agency. Those fixed costs accounted for up to three-quarters of the total cost of running a container operation, and they had to be paid no matter how much cargo was available.

In container shipping, quite unlike breakbulk, overcapacity would not diminish as owners temporarily idled their ships. Instead, rates would fall as carriers struggled to win every available box, and overcapacity would persist until the demand for shipping space eventually caught up

By one estimate, each day seaborne goods spend under way raises the exporter’s costs by 0.8 percent, which means that a typical 13-day voyage from China to the United States has the same effect as a 10 percent tariff.

in 2014, 46 percent of world container shipments moved through just 20 ports

Ken Kocienda - Creative Selection: Inside Apple’s Design Process During the Golden Age of Steve Jobs

I’ve never considered myself an “Apple fan”, but I’ve used trusty MacBook Airs for more than a decade and recently started using an iPhone, so it was fun to hear an inside view on the company’s product development process. I liked the emphasis on constantly iterating and creating demos to keep ideas and discussions concrete. However, I had a hard time understanding some of Kocienda’s other advice, e.g., how they dismissed “A/B testing” but then gushed about the “empathy” of making an in-house “game to find the best size for touch targets so it was comfortable to tap the iPhone display and accommodated people with varying levels of dexterity”. (Sounds like an A/B test to me!)

Highlights:

Exactly how we collaborated mattered, and for us on the Purple project, it reduced to a basic idea: We showed demos to each other. Every major feature on the iPhone started as a demo, and for a demo to be useful to us, it had to be concrete and specific.

Making demos is hard. It involves overcoming apprehensions about committing time and effort to an idea that you aren’t sure is right.

Persist too long in making choices without justifying them, and an entire creative effort might wander aimlessly. The results might be the sum of wishy-washy half decisions. [..] Developing the judgment to avoid this pitfall centers on the refined-like response, evaluating in an active way and finding the self-confidence to form opinions with your gut you can also justify with your head. It’s not always easy to come to grips with objects or ideas and think about them until it’s possible to express why you like them or not, yet taking part in a healthy and productive creative process requires such reflective engagement.

In my experience, this manner of culture formation works best when the groups and teams remain small, when the interpersonal interactions are habitual and rich rather than occasional and fleeting. The teams for the projects I’ve described in this book were small indeed. Ten people edited code on the Safari project before we made the initial beta announcement of the software, and twenty-five people are listed as inventors on the ’949 Patent for the iPhone.

Our leaders wanted high-quality results, and they set the constraint that they wanted to interact directly with the people doing the work, creating the demos, and so on. That placed limits on numbers.

Thanks

Thanks to:

- Sebastian Bensusan for thoughtful discussion on the jobs to be done of type systems and feedback on this article.

- James Waples, a Rust/EtherCAT expert who helped me tremendously in understanding EtherCAT and designing my own lil’ version. James is based in Scotland and is available for remote Rust work (his CV) and I highly recommend reaching out if you are in the market.

- Jeff McBride for helpful discussions on electrical communications protocols and suggesting I start with UART.

- Dan Groshev for feedback on this article.

Misc. stuff

I’ve written up Taiwan recommendations based on my time living in Taipei for the several friends who’ve asked me for suggestions.

GMail has a setting that lets you use it offline in the browser. I find this handy for reading/replying to emails while on airplanes.

Protocol Histories, an oral history of cubesats.

From Vexing Uncertainty to Intellectual Humility, a scholar on their schizophrenia.

Which word begins with “y” and looks like an axe in this picture?

The Bertinet Method: Slap & fold kneading technique (making bread)

Multi-Dimensional Analog Literals in C++ “Note: The following is all standard-conforming C++, this is not a hypothetical language extension.”

“Both popular media and a lot of tech circles tend to assume that “emoji” de facto means Apple’s particular font. I have some objections.”

“The medieval confession was motivated by the threat of torture. The modern version, a plea bargain, is motivated by the threat of a much more severe sentence if the defendant insists on a trial and is convicted. Like the medieval version, it preserves the form — every felony defendant has the right to a jury trial, a lawyer, and all the paraphernalia of the modern law of criminal defense — but not the substance.”

A Matter of Millimeters: The story of Qantas flight 32, on the cause of an engine failure.

#20 Een kijkje in de Bolletje bakkerij – Roggebrood, just an advertisement for dense bread that I love.

“The men had volunteered to be sealed inside the cave should Gibraltar fall to the Axis.”

No idea how I’ve missed Fernando Borretti’s blog until now, it’s great. See Language Tooling Antipatterns, Effective Spaced Repetition, Type Systems for Memory Safety, Why Lisp Syntax Works.

Henry Kissinger, War Criminal Beloved by America’s Ruling Class, Finally Dies

“Why is HSR so expensive? I will discuss three main groups of reasons: rail is suboptimal, HSR grading requirements are really tough, and steel-on-steel rolling is less perfect than you might think.”

“I want to take a moment to talk about one of the greatest user interface disasters in history: the shooting down of Iran Air Flight 655 by the U.S. Navy missile cruiser USS Vincennes (CG-49) over the Persian Gulf on July 3, 1988.”

“Here is the story of Rimac: About 15 years ago, a Croatian dude appeared on a DIY electric car forum I frequented. Like most people who showed up there, he wanted to convert an EV but didn’t know how.”

“It’s impossible for a programming language to be neutral on this. If the language doesn’t support [higher-kinded polymorphism], nobody can implement a Monad typeclass (or equivalent) in any way that can be expected to catch on. Advocacy to add HKP to the language will inevitably follow. If the language does support HKP, one or more alternate standard libraries built around monads will inevitably follow, along with corresponding cultural changes. (See Scala for example.) Culturally, to support HKP is to take a side, and to decline to support it is also to take a side.”